Hi everyone in the community,

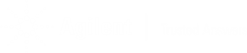

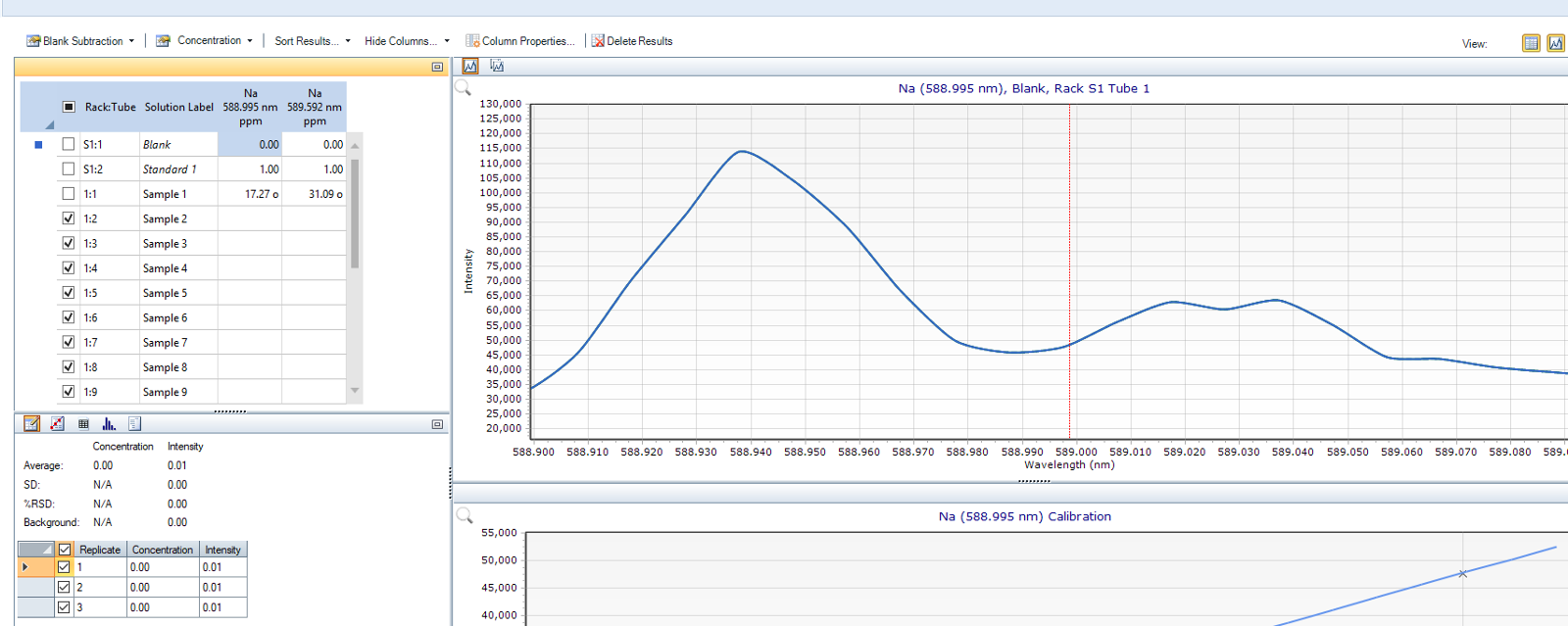

While running an analysis on the MP-AES, I noticed that my zinc calibration curve was highly linear (R = 0.997) with the auto background correction feature in the MP Expert software enabled, but strongly non-linear (R = 0.53) when I turned background correction off to look at the raw data.

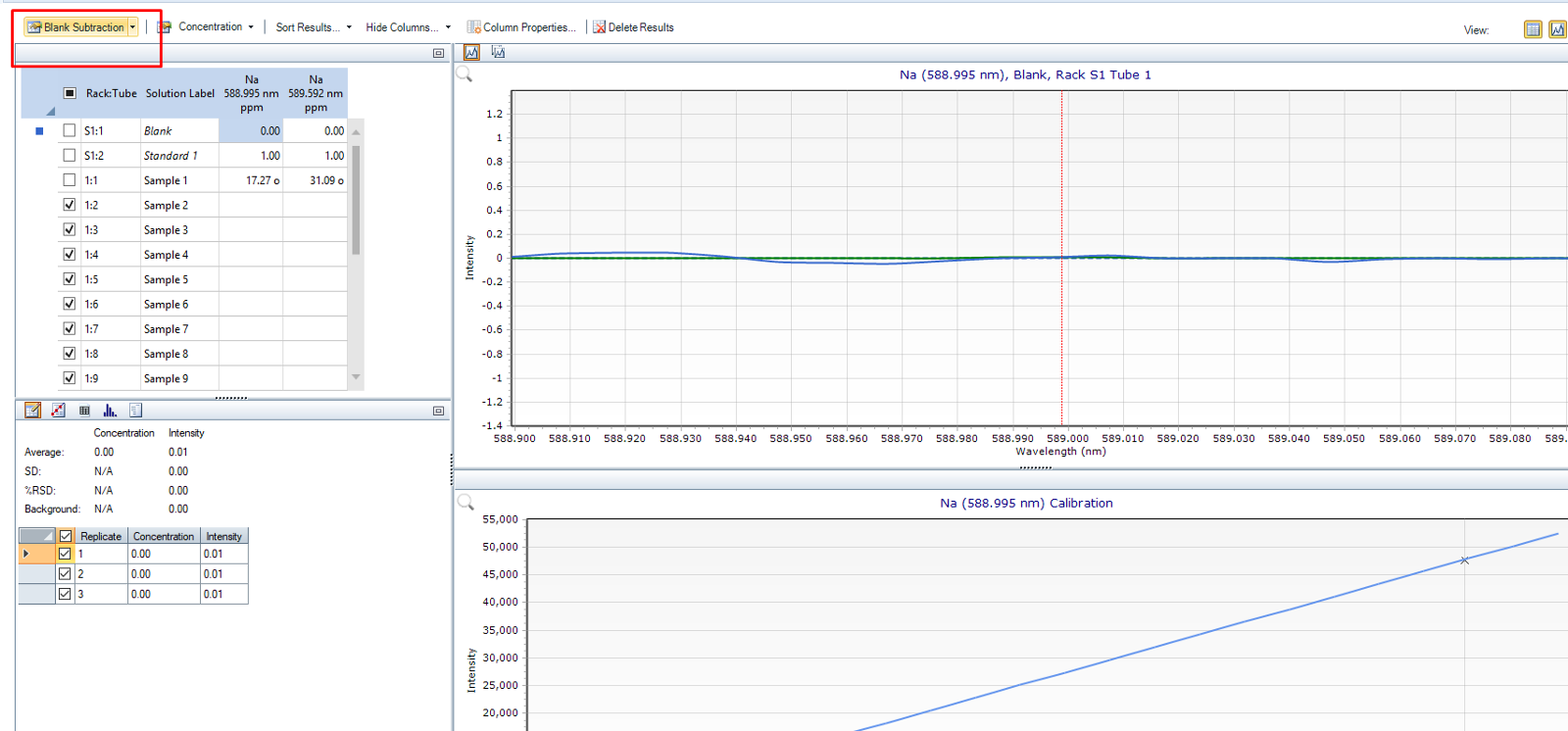
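
To make the comparison concrete, here is a minimal sketch of the kind of effect I mean. Everything in it is an assumption for illustration only: the standard concentrations, the intensities, and the background values are made-up numbers (not my instrument data), and the simple subtraction is a stand-in, not a description of what MP Expert does internally. It only shows that a background varying from standard to standard can drag R down, and that removing it restores the linear relationship.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two 1-D arrays."""
    return float(np.corrcoef(x, y)[0, 1])


# Hypothetical zinc standards (mg/L); not real calibration data.
conc = np.array([0.0, 1.0, 2.0, 5.0, 10.0])

# Assumed ideal analyte emission: strictly proportional to concentration.
net_signal = 1000.0 * conc

# Made-up background that changes from one standard to the next.
background = np.array([9000.0, 2000.0, 11000.0, 1000.0, 6000.0])

# "Raw" intensity is analyte emission plus background.
raw = net_signal + background

# Idealized correction: assumes the background is estimated perfectly.
corrected = raw - background

r_raw = pearson_r(conc, raw)
r_corrected = pearson_r(conc, corrected)

print(f"R without correction: {r_raw:.3f}")
print(f"R with correction:    {r_corrected:.3f}")
```

In a real measurement the background would have to be estimated rather than known, so the corrected R would not come out perfect the way it does in this idealized case.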

My question is: how does the auto background correction reduce interference and noise so effectively to isolate the analyte signal?

The description in the software states that it calculates the concentration using the total intensity, but is there more to it than that? I've searched online but couldn't find an answer.

I would greatly appreciate some feedback!

Thanks all,

Shane Grant