Hi All,

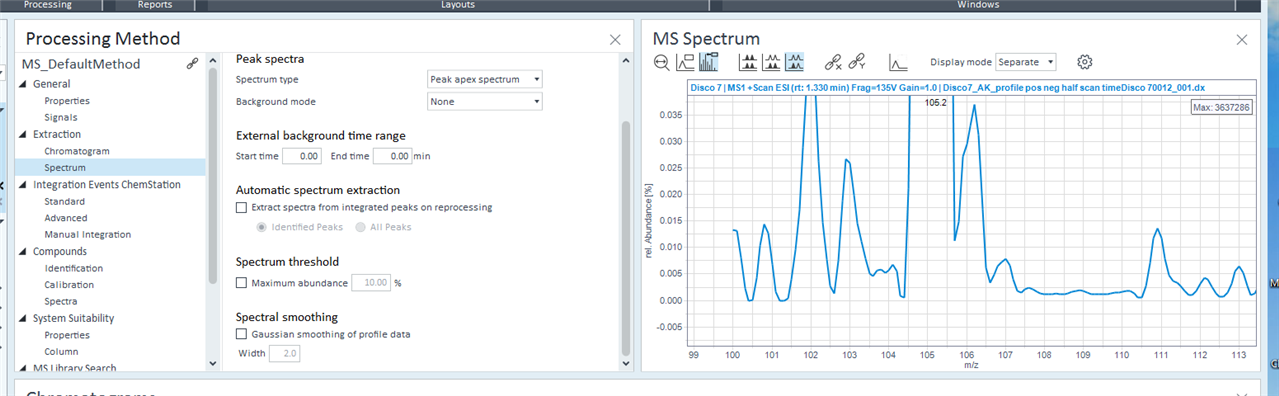

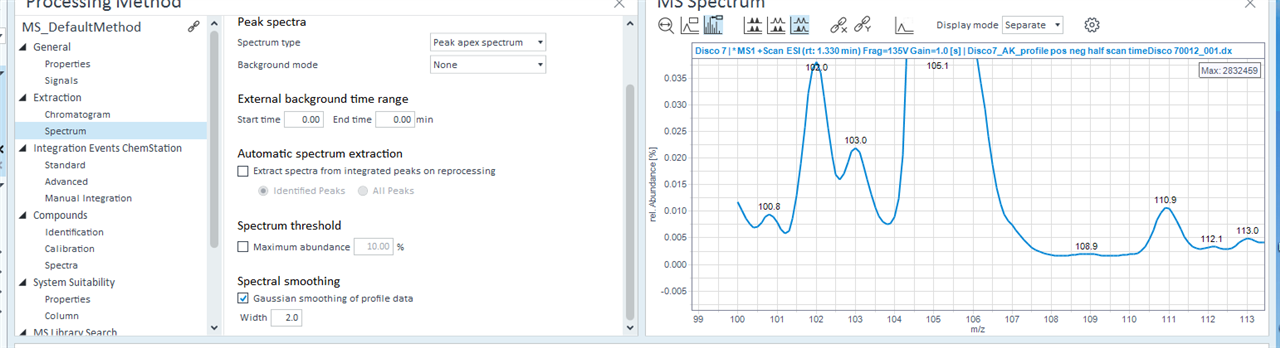

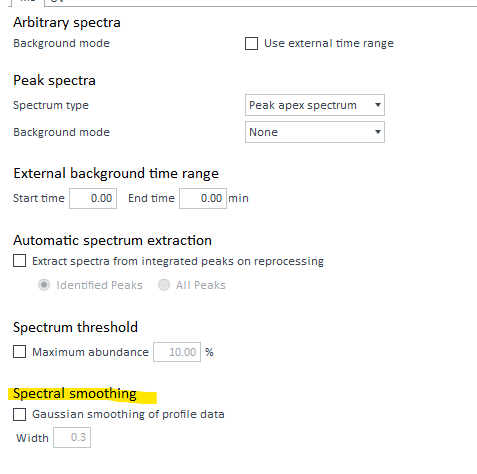

I am trying to do a simple Average MS Extraction, with background subtraction and Gaussian smoothing, on data collected on an SQ MSD (G6135C, I believe), using OL2.7 build 2.207.0.801.

When I perform the extraction, my method has Gaussian smoothing set to 2.0, the "Use External time range" background mode selected, and a time specified.

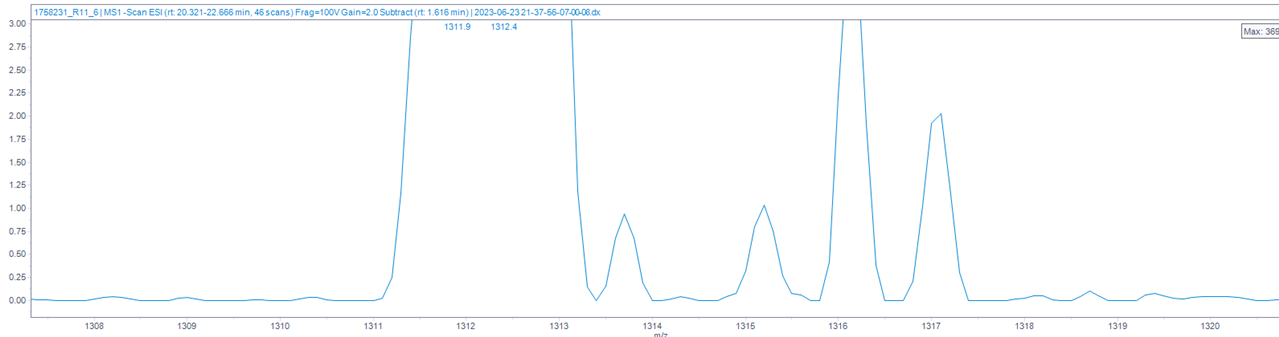

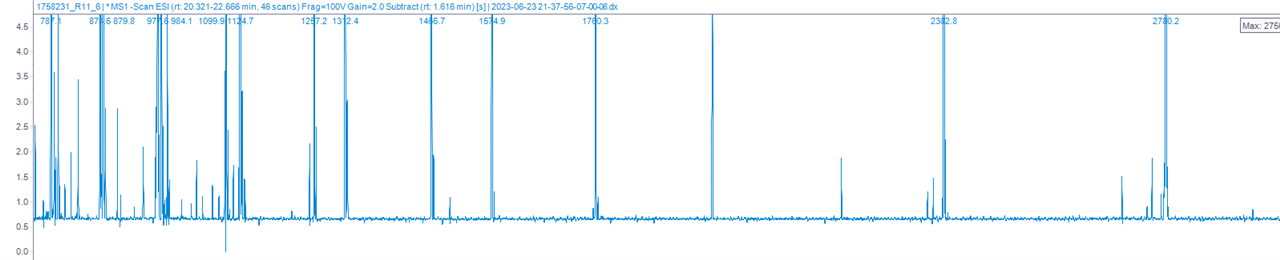
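To make the processing steps above concrete, here is a minimal Python sketch of point-by-point background subtraction followed by Gaussian smoothing of a profile spectrum. It is not OpenLab's implementation. The function names are hypothetical, and treating the "Gaussian factor" of 2.0 as a standard deviation measured in data points is an assumption. The sketch shows one thing relevant to this question: subtraction can leave negative points, and smoothing spreads a negative dip onto neighboring points rather than removing it.

```python
import math


def gaussian_kernel(sigma: float) -> list[float]:
    """Normalized Gaussian weights spanning +/- 3 sigma (sigma in data points, an assumption)."""
    half = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-0.5 * (i / sigma) ** 2) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def gaussian_smooth(signal: list[float], sigma: float) -> list[float]:
    """Convolve the signal with the Gaussian kernel; edge points reuse the nearest sample."""
    kernel = gaussian_kernel(sigma)
    half = len(kernel) // 2
    n = len(signal)
    smoothed = []
    for i in range(n):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = min(max(i + k - half, 0), n - 1)  # clamp the index at both ends
            acc += w * signal[j]
        smoothed.append(acc)
    return smoothed


def subtract_background(sample: list[float], background: list[float]) -> list[float]:
    """Point-by-point subtraction; points where background exceeds sample go negative."""
    return [s - b for s, b in zip(sample, background)]


# Hypothetical toy data: one real peak plus a background point larger than the sample.
sample = [0.0, 0.0, 0.0, 100.0, 0.0, 0.0, 0.0]
background = [0.0, 5.0, 0.0, 0.0, 0.0, 0.0, 0.0]
corrected = subtract_background(sample, background)
smoothed = gaussian_smooth(corrected, sigma=1.0)
print(min(corrected), min(smoothed))  # both minima are below zero
```

In this toy trace the first point is 0 before smoothing but negative after it, because the kernel pulls in part of the neighboring negative point.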

When I first extract the spectrum, the baseline is flat at 0.00%, but the data are not smoothed. I then reprocess the injection to smooth the spectrum, and the baseline rises, anywhere from 0.05% upward. I am including an example where smoothing shifts the baseline to ~0.70%. I know that the 'reprocess' step must be performing some sort of background subtraction that raises the baseline, yet the only difference between the spectra displayed below is the Gaussian smoothing. Once the baseline is raised, I cannot find any setting to change (including removing the Gaussian smoothing, removing the background subtraction, or changing the background subtraction) that brings the baseline back down to 0.
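One possible reading of "baseline dips causing the baseline to be at 0.66%" can be checked with simple arithmetic. Assume, and this is only an assumption, not confirmed OpenLab behavior, that the spectrum plot maps the lowest and highest intensities of the trace onto 0 to 100% of the axis. Then any negative dip left after background subtraction and smoothing lifts true zero intensity off the bottom of the axis. The hypothetical helper below computes where zero would land under that assumption.

```python
def apparent_zero_level(intensities: list[float]) -> float:
    """Percent of full scale at which zero intensity is drawn, assuming the plot
    maps [min, max] of the trace onto 0-100 % (hypothetical scaling rule)."""
    lo, hi = min(intensities), max(intensities)
    if lo >= 0 or hi == lo:
        return 0.0  # no negative points (or a flat trace): zero sits at the axis bottom
    return 100.0 * (0.0 - lo) / (hi - lo)


# Hypothetical trace: a dip to -1 after subtraction/smoothing and a base peak at 99.
print(apparent_zero_level([-1.0, 0.0, 99.0, 0.0]))  # 1.0
print(apparent_zero_level([0.0, 5.0, 100.0]))       # 0.0
```

Under this rule, the size of the deepest dip relative to the full intensity range sets how far the apparent baseline rises, which would also explain why it does not return to 0 while the dips are still present in the processed spectrum.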

I believe I am making a mistake in my processing method, but I don't know where or how. Any help would be greatly appreciated!

thanks,

brij

Figures:

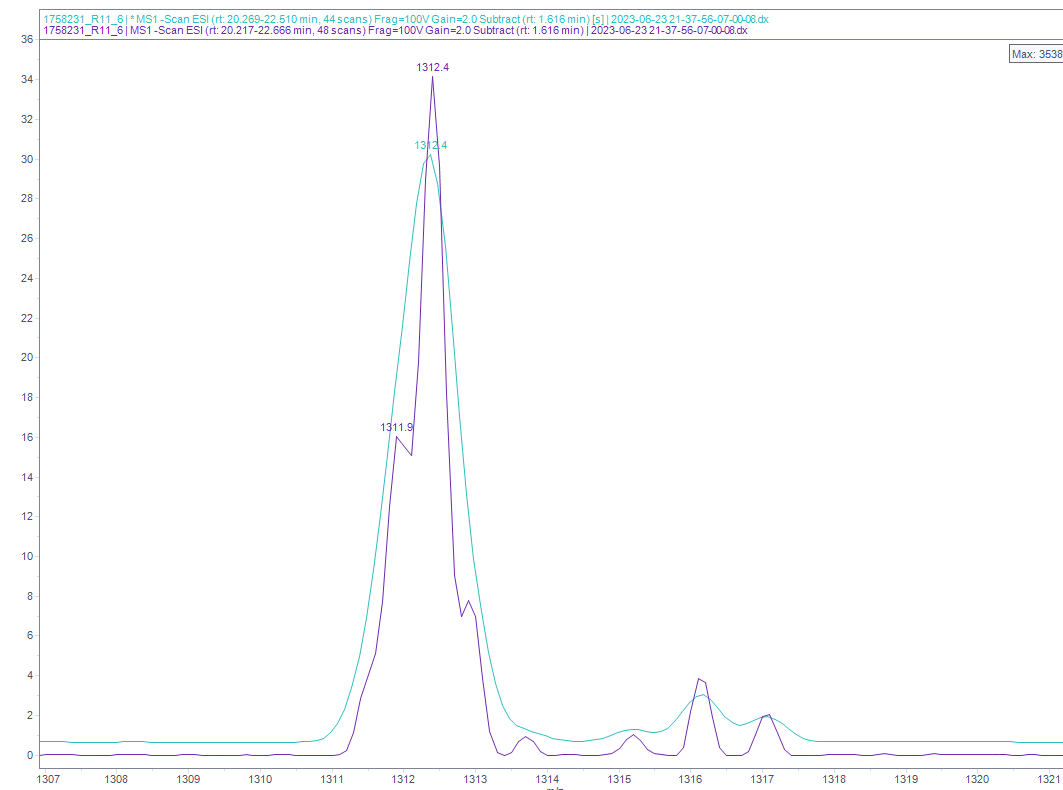

Initial Extraction zoomed in, flat baseline at 0.00%:

Reprocessed to smooth (Gaussian factor: 2.0), baseline raised to 0.66%:

Zoomed out after smoothing, showing the baseline dips that put the baseline at 0.66%:

Overlaid: